Chaos Engineering: Why you need it to build resilience for your software offerings

What is Chaos Engineering?

Chaos engineering is the practice of experimenting with deliberately induced failures in a distributed system to improve the system’s ability to withstand turbulent production conditions.

The term “chaos engineering” may sound like an oxymoron or even the name of an evil force from a sci-fi movie, but it’s actually a prevailing approach that’s making modern technology architectures more resilient.

Testing in production has been gaining momentum in the DevOps community as a way to catch expensive defects early. However, not every defect can be foreseen before deployment.

The fact is that you will see failures in production. The question is whether those failures catch you by surprise or whether you use them deliberately to test the application’s resilience. The latter approach is called chaos engineering.

Why Practice Chaos Engineering?

Chaos engineering is a relatively new approach to software testing that reduces unpredictability by checking the system’s ability to tolerate unavoidable failures.

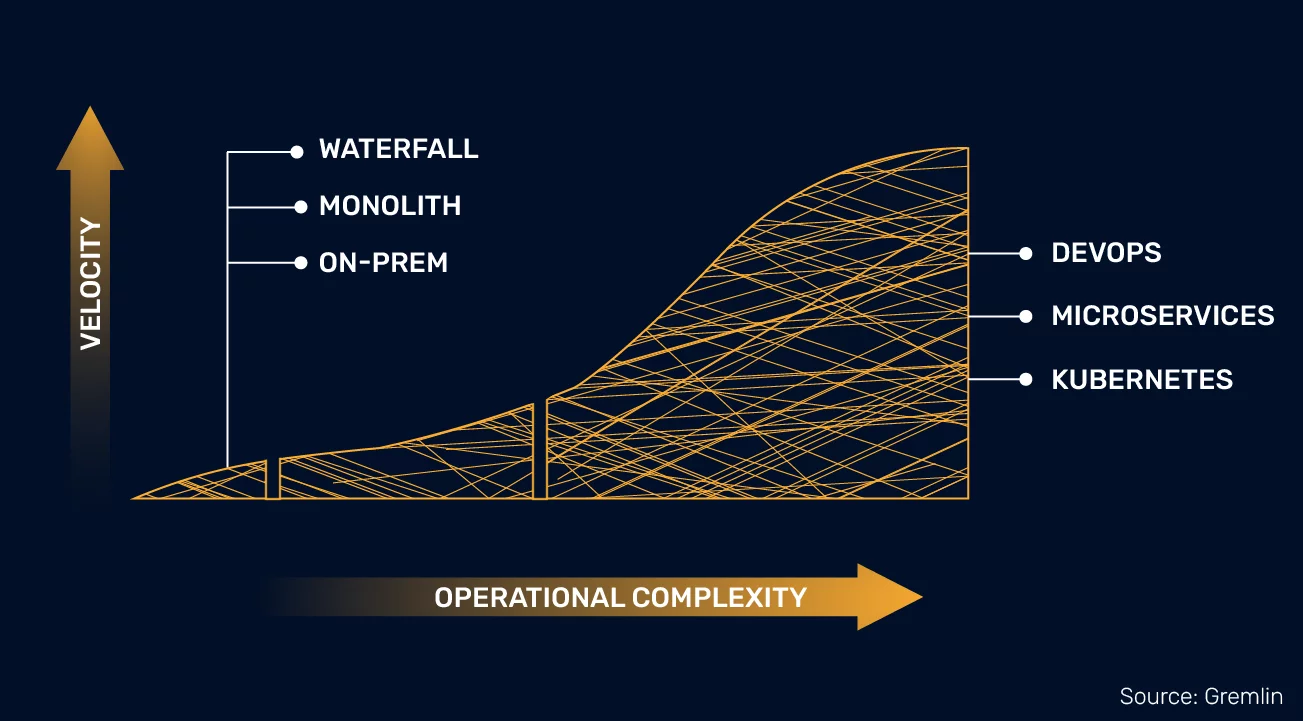

As large organizations move to cloud-based architectures to build reliable software, their systems are becoming more complex. Failures and outages are much harder to detect, and they ultimately hurt a company’s bottom line. The shift to DevOps has also made reliability testing more complex.

For instance, in 2017, 98% of organizations said that a single hour of downtime cost them more than $100,000.

A few years ago, Gartner estimated that outages can cost organizations anywhere between $140,000 and $540,000 per hour.

The CEO of British Airways has said that a 2017 outage left thousands of passengers stranded and cost the company $102.19 million.

Because waiting for the next outage and then fixing it is not a viable strategy, companies started looking for ways to get ahead of these challenges. That is how chaos engineering emerged.

Kolton Andrus, the CEO of the chaos engineering startup Gremlin, compares chaos testing to a flu shot. Just as a flu shot is injected into your body to prevent illness, chaos testing injects harm into a system to check how it responds. This lets businesses prepare for outages and minimize the effects of downtime.

In technical terms, chaos testing is the process of experimenting on a system to test its ability to withstand inevitable conditions, such as outages and downtime, in production.


Chaos Engineering – A Brief Journey

Did you know that your favorite online streaming service, Netflix, was the first to put chaos engineering principles into practice?

In 2008, Netflix fell victim to major database corruption that led to a three-day outage, during which the company could not ship any DVDs.

According to Gremlin, after that outage Netflix began looking for alternative architectures, and in 2011 it migrated to a cloud-based architecture on AWS. Because the new architecture was made up of many microservices, Netflix could avoid single points of failure. However, it also introduced new complexities that created new ways for the system to fail.

Learning from these failures, Netflix’s engineers applied chaos engineering principles and created Chaos Monkey, a tool that randomly disables production instances to make sure the service can survive common system failures without any impact on customers’ viewing experience. With this tool, Netflix’s engineers deliberately injected chaos into their systems to build resilience.
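The idea behind Chaos Monkey can be illustrated with a toy simulation. This is a minimal sketch, not Netflix’s implementation: it assumes a hypothetical in-memory `Cluster` whose load balancer routes only to healthy instances, randomly terminates one instance, and then checks whether requests are still served.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Instance:
    name: str
    healthy: bool = True


@dataclass
class Cluster:
    """Hypothetical stand-in for a group of instances behind a load balancer."""
    instances: list = field(default_factory=list)

    def serve(self, request_id: int) -> str:
        # The load balancer routes only to healthy instances.
        live = [i for i in self.instances if i.healthy]
        if not live:
            raise RuntimeError("service unavailable")
        return live[request_id % len(live)].name


def chaos_monkey(cluster: Cluster, rng: random.Random) -> Instance:
    """Simulate terminating one randomly chosen healthy instance."""
    victim = rng.choice([i for i in cluster.instances if i.healthy])
    victim.healthy = False
    return victim


def survives_chaos(cluster: Cluster, rng: random.Random, requests: int = 100) -> bool:
    """Kill one instance, then check that every request is still served."""
    chaos_monkey(cluster, rng)
    try:
        for request_id in range(requests):
            cluster.serve(request_id)
    except RuntimeError:
        return False
    return True


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for a reproducible run
    redundant = Cluster([Instance(f"web-{n}") for n in range(3)])
    single = Cluster([Instance("web-0")])
    print("3 instances survive:", survives_chaos(redundant, rng))  # True
    print("1 instance survives:", survives_chaos(single, rng))     # False
```

The redundant cluster keeps serving after losing an instance, while the single-instance cluster fails. Finding that kind of single point of failure before customers do is the point of the exercise.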

Here is how the field of chaos engineering evolved afterward:

2011

Building on the success of Chaos Monkey, Netflix introduced the Simian Army, a suite of tools with additional failure-injection modes that allow more comprehensive system testing. Together, Chaos Monkey and the Simian Army helped ensure the reliability, recoverability, security, and resiliency of Netflix’s AWS cloud infrastructure.

2014

Netflix created a new role, “Chaos Engineer.” Kolton Andrus, who later founded Gremlin, was working at Netflix at the time. With the help of this team, he built Failure Injection Testing (FIT), a tool based on the ideas behind the Simian Army that gave Netflix’s developers more control over the “blast radius” of a failure. The Simian Army tools were so effective that they sometimes caused painful outages, which made developers wary of them. FIT’s control over the scope of failure helped prevent many potential outages.

2016

Kolton Andrus founded Gremlin, the world’s first chaos engineering company, which offers tools to help businesses build reliable software. In 2018, Gremlin launched the first large-scale conference on chaos engineering, and attendance grew 10X in just two years.

2020

Chaos engineering was added to the Reliability pillar of the AWS Well-Architected Framework. AWS also announced AWS Fault Injection Simulator (FIS), a managed service for running chaos experiments on AWS workloads.

2021

As adoption spread, Gremlin published its first chaos engineering report, covering how mature the practice is among organizations, its benefits, how to run chaos experiments effectively, and more.

Chaos Engineering Examples

Which chaos experiments you run depends on your system architecture. Here are a few common ones that organizations use:

- Exhausting memory on cloud instances to see how services cope.

- Injecting a failure into an entire Availability Zone (AZ).

- Simulating high CPU load or a sudden spike in traffic.
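The first and third experiments above both put a host under resource pressure. The following is a minimal single-process sketch under stated assumptions: it burns one CPU core and holds a bounded block of memory for a fixed duration, and `apply_pressure` and its safety cap are hypothetical. In practice, teams typically use dedicated tools such as the Linux `stress-ng` utility or a chaos engineering platform, and run them only against hosts they are prepared to lose.

```python
import time


def apply_pressure(duration_s: float, memory_mb: int, max_memory_mb: int = 512) -> dict:
    """Hypothetical helper: burn one CPU core and hold memory for duration_s seconds.

    Runs in a single process, so it loads only one core. max_memory_mb is a
    safety cap so the experiment cannot exhaust the whole host.
    """
    if memory_mb > max_memory_mb:
        raise ValueError(f"refusing to allocate {memory_mb} MB (cap is {max_memory_mb} MB)")

    # Allocate the memory and touch every page so it is actually resident.
    ballast = bytearray(memory_mb * 1024 * 1024)
    for offset in range(0, len(ballast), 4096):
        ballast[offset] = 1

    # Busy-loop on one core until the deadline passes.
    iterations = 0
    start = time.perf_counter()
    deadline = start + duration_s
    while time.perf_counter() < deadline:
        iterations += 1

    elapsed = time.perf_counter() - start
    held_mb = len(ballast) // (1024 * 1024)
    del ballast  # release the memory when the experiment ends
    return {"elapsed_s": elapsed, "iterations": iterations, "memory_mb": held_mb}


if __name__ == "__main__":
    # Watch your dashboards and alerts while this runs against a test host.
    print(apply_pressure(duration_s=2.0, memory_mb=64))
```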

As for real-world examples, apart from Chaos Monkey, Netflix has built several other tools to actively test its systems’ resilience.

1. Latency Monkey

Introduces artificial delays into client-server communication to test how services tolerate degraded dependencies. Very large delays can simulate a node, or even an entire service, going down.
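Latency Monkey checks whether callers handle slow dependencies gracefully. In this hypothetical sketch (not Netflix’s code), `inject_latency` wraps a dependency with an artificial delay, and `call_with_timeout` uses Python’s `concurrent.futures` to stop waiting after a deadline and return a fallback. The `recommendations` service is an invented stand-in.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

_pool = ThreadPoolExecutor(max_workers=4)


def recommendations(user_id: str) -> list:
    # Hypothetical downstream service call.
    return [f"title-for-{user_id}"]


def inject_latency(func, delay_s: float):
    """Wrap func so every call is delayed by delay_s seconds (a simulated slow network)."""
    def delayed(*args, **kwargs):
        time.sleep(delay_s)
        return func(*args, **kwargs)
    return delayed


def call_with_timeout(func, arg, timeout_s: float, fallback):
    """Call func(arg) in a worker thread; return fallback if it exceeds timeout_s."""
    future = _pool.submit(func, arg)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        return fallback


if __name__ == "__main__":
    healthy = call_with_timeout(recommendations, "u1", timeout_s=0.5, fallback=["popular"])
    slow = inject_latency(recommendations, delay_s=1.0)
    degraded = call_with_timeout(slow, "u1", timeout_s=0.5, fallback=["popular"])
    print(healthy)   # ['title-for-u1']
    print(degraded)  # ['popular']
```

With the delay injected, the caller serves the fallback instead of hanging. The slow call still finishes in its worker thread, so a production client would also need to bound that work.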

2. Conformity Monkey

It finds instances that don’t adhere to the engineering team’s best practices and sends an email to the owner of the instance to remediate the problem.
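A conformity check boils down to evaluating rules against instance metadata. The rules below (an owner tag, membership in an auto-scaling group, an approved instance type) and the record layout are assumptions for illustration, not Conformity Monkey’s actual rule set. Instead of sending email, the sketch groups violations by owner so that a notifier could send one message per owner.

```python
from collections import defaultdict

# Hypothetical best-practice rules: each returns a problem description or None.
APPROVED_TYPES = {"m5.large", "m5.xlarge"}


def has_owner(inst):
    return None if inst.get("owner") else "missing owner tag"


def in_autoscaling_group(inst):
    return None if inst.get("asg") else "not in an auto-scaling group"


def approved_type(inst):
    t = inst.get("type")
    return None if t in APPROVED_TYPES else f"instance type {t} is not approved"


RULES = [has_owner, in_autoscaling_group, approved_type]


def find_violations(instances):
    """Return {owner: [(instance_id, problem), ...]} for every rule that fails."""
    report = defaultdict(list)
    for inst in instances:
        for rule in RULES:
            problem = rule(inst)
            if problem:
                owner = inst.get("owner") or "unowned"
                report[owner].append((inst["id"], problem))
    return dict(report)


if __name__ == "__main__":
    fleet = [
        {"id": "i-1", "owner": "alice@example.com", "asg": "web", "type": "m5.large"},
        {"id": "i-2", "owner": "bob@example.com", "asg": None, "type": "t2.micro"},
        {"id": "i-3", "owner": None, "asg": "batch", "type": "m5.xlarge"},
    ]
    for owner, problems in find_violations(fleet).items():
        # A real tool would email the owner here; this sketch just prints.
        print(owner, problems)
```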

3. Doctor Monkey

Checks health signals from individual instances (such as ETL, storage, and CPU load) and removes an instance from service when it detects that the instance is unhealthy.
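Doctor Monkey’s decision can be approximated by combining an instance’s own health check with an external signal such as CPU load. The thresholds (above 90% CPU for the last three samples) and the `InstanceHealth` record are hypothetical choices for this sketch.

```python
from dataclasses import dataclass


@dataclass
class InstanceHealth:
    instance_id: str
    health_check_ok: bool  # result of the instance's own health endpoint
    cpu_samples: list      # recent CPU utilization readings, 0-100


def is_unhealthy(h: InstanceHealth, cpu_threshold: float = 90.0, consecutive: int = 3) -> bool:
    """Unhealthy if the health check fails, or CPU stayed above the threshold
    for each of the last `consecutive` samples."""
    if not h.health_check_ok:
        return True
    recent = h.cpu_samples[-consecutive:]
    return len(recent) == consecutive and all(c > cpu_threshold for c in recent)


def remove_unhealthy(pool: list) -> tuple:
    """Split the pool into (kept, removed); removed instances leave service."""
    kept = [h for h in pool if not is_unhealthy(h)]
    removed = [h for h in pool if is_unhealthy(h)]
    return kept, removed


if __name__ == "__main__":
    pool = [
        InstanceHealth("i-a", True, [40, 55, 60]),
        InstanceHealth("i-b", True, [95, 97, 99]),   # pegged CPU
        InstanceHealth("i-c", False, [10, 12, 11]),  # failing health check
    ]
    kept, removed = remove_unhealthy(pool)
    print([h.instance_id for h in kept])     # ['i-a']
    print([h.instance_id for h in removed])  # ['i-b', 'i-c']
```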

4. Janitor Monkey

Searches the cloud environment for unused resources (clutter and waste) and permanently deletes them so the cloud service keeps running smoothly.
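A Janitor Monkey-style sweep looks for resources that nothing uses anymore. This hypothetical sketch treats a storage volume as unused when it is unattached and has been idle for more than a configurable number of days (an assumed rule). It defaults to a dry run and delegates the actual deletion to a `delete` callback you supply, because permanent deletion is the one step you cannot undo.

```python
from datetime import datetime, timedelta, timezone


def find_unused(resources, now, max_idle_days=7):
    """Return ids of resources that are unattached and idle longer than max_idle_days."""
    cutoff = now - timedelta(days=max_idle_days)
    return [
        r["id"]
        for r in resources
        if r["attached_to"] is None and r["last_used"] < cutoff
    ]


def clean_up(resources, now, delete, max_idle_days=7, dry_run=True):
    """Report unused resources; call delete(id) for each only when dry_run is False."""
    candidates = find_unused(resources, now, max_idle_days)
    if not dry_run:
        for rid in candidates:
            delete(rid)
    return candidates


if __name__ == "__main__":
    now = datetime(2021, 12, 8, tzinfo=timezone.utc)
    volumes = [
        {"id": "vol-1", "attached_to": "i-1", "last_used": now - timedelta(days=30)},
        {"id": "vol-2", "attached_to": None, "last_used": now - timedelta(days=30)},
        {"id": "vol-3", "attached_to": None, "last_used": now - timedelta(days=1)},
    ]
    print(clean_up(volumes, now, delete=print))  # ['vol-2'] (dry run, nothing deleted)
```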

5. Security Monkey

Keeps security measures up to date by finding security violations, such as misconfigured AWS security settings, alerting the responsible teams, and removing instances that are out of compliance.
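A Security Monkey-style audit might flag security groups that expose sensitive ports to the entire internet. The port policy and the dictionary layout below are assumptions for illustration; a real tool would read security group rules from the cloud provider’s API.

```python
SENSITIVE_PORTS = {22, 3389, 5432}  # SSH, RDP, PostgreSQL (assumed policy)


def open_to_world(rule):
    """True if the ingress rule allows any IPv4 or IPv6 source."""
    return rule["cidr"] in ("0.0.0.0/0", "::/0")


def audit_security_groups(groups):
    """Return (group_id, port) pairs where a sensitive port is open to the internet."""
    findings = []
    for group in groups:
        for rule in group["ingress"]:
            if not open_to_world(rule):
                continue
            ports = range(rule["from_port"], rule["to_port"] + 1)
            for port in sorted(SENSITIVE_PORTS):
                if port in ports:
                    findings.append((group["id"], port))
    return findings


if __name__ == "__main__":
    groups = [
        {"id": "sg-web", "ingress": [{"cidr": "0.0.0.0/0", "from_port": 443, "to_port": 443}]},
        {"id": "sg-admin", "ingress": [{"cidr": "0.0.0.0/0", "from_port": 22, "to_port": 22}]},
        {"id": "sg-db", "ingress": [{"cidr": "10.0.0.0/16", "from_port": 5432, "to_port": 5432}]},
    ]
    print(audit_security_groups(groups))  # [('sg-admin', 22)]
```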

6. Chaos Gorilla

A larger-scale version of Chaos Monkey, it simulates the outage of an entire AZ. Netflix uses it to verify that its services automatically rebalance to the remaining AZs and keep running without user-visible impact when an AZ fails.
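Whether a service can ride out an AZ outage is partly a capacity question: after the zone is gone, do the remaining zones have enough instances to carry peak load? This back-of-the-envelope sketch assumes a hypothetical fleet layout and peak requirement; Chaos Gorilla answers the question empirically by actually taking a zone out of service.

```python
import math


def survives_zone_loss(instances_per_zone: dict, required: int) -> dict:
    """For each zone, can the other zones still supply `required` instances if it fails?"""
    total = sum(instances_per_zone.values())
    return {zone: total - count >= required for zone, count in instances_per_zone.items()}


def min_instances_per_zone(required: int, zones: int) -> int:
    """Smallest equal per-zone count that still meets `required` after losing one zone."""
    if zones < 2:
        raise ValueError("surviving a zone outage needs at least two zones")
    return math.ceil(required / (zones - 1))


if __name__ == "__main__":
    fleet = {"us-east-1a": 4, "us-east-1b": 4, "us-east-1c": 2}
    # Assume peak load needs 7 instances.
    print(survives_zone_loss(fleet, required=7))
    # {'us-east-1a': False, 'us-east-1b': False, 'us-east-1c': True}
    print(min_instances_per_zone(required=7, zones=3))  # 4
```

Losing either larger zone leaves only 6 instances against a requirement of 7. Spreading 4 instances across each of the 3 zones (12 in total) would survive the loss of any single zone in this example.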

Vital Principles Of Chaos Engineering

Chaos engineering principles were first documented by Netflix, following its work on Chaos Monkey. The document defines chaos testing as experimentation to uncover a system’s weaknesses, in four steps:

- Start by defining the “normal state” (steady state) of your system as some measurable output that indicates normal behavior.

- Hypothesize that this normal state will continue in both the control group and the experimental group.

- Introduce variables that reflect real-world events, such as server crashes and storage malfunctions.

- Try to disprove the hypothesis by looking for a difference in the normal state between the control group and the experimental group.
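The four steps above map onto a small experiment harness. This is a simulation under stated assumptions, not a production tool: steady state is the error rate of simulated requests, a hypothetical dependency fault with probability `fault_probability` is injected only into the experimental group, the system retries the dependency `retries` times, and the hypothesis is rejected if the experimental error rate exceeds the control rate by more than `tolerance` (an arbitrary 0.01 here).

```python
import random


def handle_request(rng, fault_probability, retries):
    """One simulated request: a dependency call that may fail, retried up to `retries` times."""
    if rng.random() < 0.01:  # assumed 1% baseline failure unrelated to the fault
        return False
    for _ in range(retries + 1):
        if rng.random() >= fault_probability:
            return True      # dependency call succeeded
    return False             # every attempt hit the injected fault


def error_rate(rng, n, fault_probability, retries):
    failures = sum(not handle_request(rng, fault_probability, retries) for _ in range(n))
    return failures / n


def run_experiment(fault_probability, retries, tolerance=0.01, n=20_000, seed=7):
    rng = random.Random(seed)
    # Steps 1-2: steady state is the error rate; hypothesis: both groups stay at it.
    control = error_rate(rng, n, fault_probability=0.0, retries=retries)
    # Step 3: inject the real-world variable into the experimental group only.
    experiment = error_rate(rng, n, fault_probability=fault_probability, retries=retries)
    # Step 4: try to disprove the hypothesis by comparing the two groups.
    return {
        "control": control,
        "experiment": experiment,
        "hypothesis_holds": experiment - control <= tolerance,
    }


if __name__ == "__main__":
    print("no retries:   ", run_experiment(fault_probability=0.2, retries=0))
    print("three retries:", run_experiment(fault_probability=0.2, retries=3))
```

Without retries, the injected fault raises the error rate by roughly 20 percentage points and the hypothesis is disproved, exposing a weakness. With three retries, the same fault barely moves the steady state.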

Keep in mind that the harder it is to disrupt the normal state, the more confidence you can have in the resilience of your system.

Advanced Principles of Chaos Engineering

The following principles describe a more rigorous way to apply the experiment process above. If an experiment uncovers a weakness, you have a target for improvement before that weakness shows up in the system at large.

Hypothesize around the normal state

Focus on the measurable output of the system, such as error rates or latency percentiles, rather than its internal attributes. By focusing on these outputs, chaos experiments verify that the system works, rather than trying to validate how it works.
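Steady state is usually expressed as a few numbers computed from request data. This sketch computes the 99th-percentile latency with the nearest-rank method and the error rate, then compares them with limits; the thresholds in `STEADY_STATE` and the sample data are invented for illustration.

```python
import math

# Hypothetical steady-state definition: ceilings for p99 latency and error rate.
STEADY_STATE = {"p99_ms": 250.0, "error_rate": 0.01}


def percentile(values, p):
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def measure(requests):
    """requests: list of (latency_ms, succeeded) tuples."""
    latencies = [lat for lat, _ in requests]
    errors = sum(1 for _, ok in requests if not ok)
    return {"p99_ms": percentile(latencies, 99), "error_rate": errors / len(requests)}


def in_steady_state(metrics):
    return all(metrics[k] <= limit for k, limit in STEADY_STATE.items())


if __name__ == "__main__":
    # 100 requests with latencies of 1..100 ms; the request at 50 ms failed.
    sample = [(float(ms), ms != 50) for ms in range(1, 101)]
    m = measure(sample)
    print(m)                   # {'p99_ms': 99.0, 'error_rate': 0.01}
    print(in_steady_state(m))  # True
```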

Choose real-world events

Prioritize events by their potential impact, and consider both hardware and software failures. Any event capable of disrupting the normal state is a potential variable.

Contain the blast radius

Running chaos tests in production can hurt the customer experience. The chaos engineer on your team is responsible for minimizing the blast radius and making sure the whole team is ready to respond to incidents. When the blast radius is contained, outages and failures do not seriously harm your customers, yet they still give you valuable insights for building robust software.
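One common way to contain the blast radius is to inject a fault for only a small, stable slice of users and keep a kill switch to abort at once. In this sketch, a user ID is hashed with `hashlib.sha256` into 10,000 buckets, and the fault applies to the chosen percentage of buckets; the 1% default, the experiment name `payments-latency`, and the function names are hypothetical.

```python
import hashlib


def in_blast_radius(user_id: str, experiment: str, percent: float = 1.0) -> bool:
    """Deterministically place percent% of users in the experiment.

    Hashing experiment + user_id keeps each user's assignment stable across
    requests, while different experiments get different slices of users.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100                        # 1% -> buckets 0..99


def handle(user_id: str, kill_switch: bool = False) -> str:
    # The kill switch lets the team abort the experiment instantly.
    if not kill_switch and in_blast_radius(user_id, "payments-latency"):
        return "fault injected"
    return "normal path"


if __name__ == "__main__":
    users = [f"user-{n}" for n in range(100_000)]
    affected = sum(in_blast_radius(u, "payments-latency") for u in users)
    print(f"{affected} of {len(users)} users affected")  # roughly 1,000
```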

Benefits Of Chaos Engineering

By preventing lengthy outages, chaos testing helps your business avoid heavy revenue losses and scale quickly.

Chaos engineering offers many benefits to your business, your technical teams, and your customers, including:

Reduced System Downtime

By injecting chaos into your system, you can quickly identify the causes of common and recurring downtime, which helps you strengthen the system against failures.

Improved Customer Satisfaction

Chaos engineering prevents service disruption by curbing outages, which in turn improves user experience.

Enhanced Reliability

Injecting chaos into the system helps it build resilience, and a more resilient system is one your customers can rely on for their needs.

Chaos Engineering vs Performance Testing

Simply put, chaos engineering deals with cascading failures, while performance testing typically involves a single round of load testing as part of the QA cycle.

Developers usually design systems to be elastic in terms of networking, persistence, and handling load. As we move to distributed architectures, however, the thundering herd problem gets worse.

For instance, in a complex cloud architecture, a thundering herd might show up as a messaging system that can accept any number of messages while processing those messages becomes a bottleneck.
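The bottleneck described above is simple arithmetic: whenever messages arrive faster than consumers can process them, the backlog grows by the difference. This sketch assumes hypothetical per-second arrival counts and a fixed consumer capacity.

```python
def queue_depth(arrivals, capacity_per_s):
    """Return the queue depth after each second, given per-second arrival counts."""
    depth, history = 0, []
    for arriving in arrivals:
        depth += arriving
        depth -= min(depth, capacity_per_s)  # consumers drain at most capacity_per_s
        history.append(depth)
    return history


if __name__ == "__main__":
    # Steady 800 msg/s, a 5-second herd at 3,000 msg/s, then steady again.
    # Consumers can process 1,000 msg/s.
    arrivals = [800] * 3 + [3000] * 5 + [800] * 5
    print(queue_depth(arrivals, capacity_per_s=1000))
    # [0, 0, 0, 2000, 4000, 6000, 8000, 10000, 9800, 9600, 9400, 9200, 9000]
```

Once the herd passes, the backlog drains only at the spare capacity (1,000 - 800 = 200 messages per second), so clearing the remaining 9,000 messages takes another 45 seconds.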

A load or performance test would suffice to prepare you for this kind of stress. But what if the bottleneck triggers a linked failure in another part of your system that nobody anticipated? This is where chaos engineering comes in.

Such a linked failure is called a cascading failure: one fault triggers failures in other parts of the system. In a complex architecture, chaos testing helps you find potential cascading failures ahead of time so that you can build robust software.
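A cascading failure can be modeled as propagation through a dependency graph: a service fails when any dependency it relies on has failed, unless it has a working fallback for that dependency. The architecture and fallback set below are hypothetical; chaos experiments are how you find out whether such fallbacks exist and actually work.

```python
# service -> services it calls (hypothetical architecture)
DEPENDS_ON = {
    "web": ["api"],
    "api": ["orders", "recommendations"],
    "orders": ["database"],
    "recommendations": ["database"],
    "database": [],
}

# (caller, dependency) pairs where the caller degrades gracefully instead of failing
FALLBACKS = {("api", "recommendations")}


def failed_services(initial_failure, depends_on, fallbacks):
    """Return every service that ends up failing after initial_failure goes down."""
    failed = {initial_failure}
    changed = True
    while changed:  # keep propagating until no new service fails
        changed = False
        for service, deps in depends_on.items():
            if service in failed:
                continue
            if any(d in failed and (service, d) not in fallbacks for d in deps):
                failed.add(service)
                changed = True
    return failed


if __name__ == "__main__":
    for root in ("recommendations", "database"):
        print(root, "->", sorted(failed_services(root, DEPENDS_ON, FALLBACKS)))
    # recommendations -> ['recommendations']
    # database -> ['api', 'database', 'orders', 'recommendations', 'web']
```

A failed recommendations service is absorbed by the API’s fallback, but a database failure takes down every service above it.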

Learning Chaos Engineering: What’s Next?

What began as a tool for injecting failures into a production environment has grown into a practice embraced by tech communities worldwide.

As more businesses embrace digital transformation to improve customer outcomes, adoption of complex cloud infrastructure is set to soar, and so will the number of outages and failures.

What started with Chaos Monkey has matured into a practice in which organizations invest resources in building their own chaos engineering tools.

The next step should be to dig deeper into the hard parts, such as planning and remediation, rather than breaking things first. A few vendors have already started exploring this space and have come up with an alternative name for chaos engineering: “continuous verification.” However, there are not many players here yet, as most companies use either AWS Fault Injection Simulator or Azure Fault Analysis Service.

Systems fail all the time, sometimes in unprecedented ways with disastrous consequences. Much evolution lies ahead in this area as more vendors enter it, give it new names, and use it for different purposes. Watch this space for the latest developments in chaos engineering.

Talk to our product engineering experts to see how we can help you embrace chaos to build resilient products.

“Behind all the beauty lies madness and chaos.”

Tanner Walling

Published Date: 08 December, 2021